Kristin: Welcome, Gopi, so nice to have you.

We increasingly hear about AI in the news, almost as if there’s an arms race. There’s this AI race, and the idea is that whichever nation winds up with the most powerful AI will have an advantage over the others. AI is increasingly being used in all manner of applications and areas of life, so I guess where I’d like to start is by having you share your definition of what artificial intelligence actually is.

Gopi: OK, so my definition of artificial intelligence would be something akin to a material rigidification of a thought process. It is not a machine that is thinking. It is our thinking that we rigidify to the point that it is then taken up by the machine and repeated over and over again.

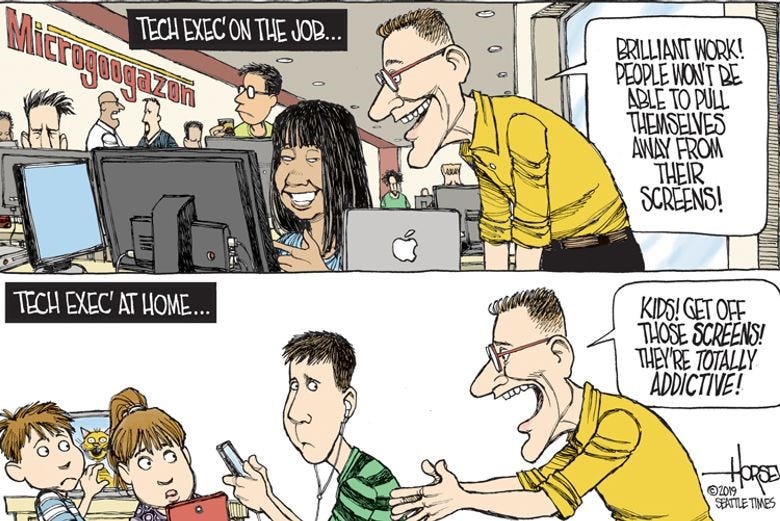

So one thing that I noticed is people don’t understand how this enthusiasm for AI kicks in. How do people keep working on it? How do people keep building on it layer upon layer upon layer over the last several decades? One of the fundamental driving forces — or the fuel, if you will — of this whole topic of AI is the laziness of human thinking.

This is the fundamental driving force. There’s enormous fuel there. Nobody can argue against it. It’s an unending supply because wherever you feel that you don’t want to think over a situation again, you want to automate it. Wherever you automate, then you want a machine to repeat it. Wherever you want to do that, it’s a matter of setting up the right logical pathways. Even the logical pathways are no different than setting up a domino board. People do not realize that there is no actual difference conceptually between a domino board and a super highly powerful AI right now.

In terms of how it functions, it still works on basic semiconductor hardware. It still works on the on-and-off principle, which, in the domino situation, is standing and falling. It’s the same idea. Conceptually there is no difference. What is different is the ability of these things to be layered one on top of the other. The biggest layer is the enormous advance in semiconductor technology itself. This drives ever greater miniaturization, which in turn allows for ever greater storage space.

Through brute force, you can get things working that would not have been possible before. It’s a combination of brute force, technological progress in miniaturization, and the laziness of thinking at the human level. These three together create a perfect storm for the next big wave of AI development. This has been the trend from the days of the calculator until today, ramping itself up every year. The seed of the definition of AI lies in these concepts: the human aspect, the machine aspect, the technical aspect.

Kristin: In the past I’ve heard you talk about the evolution of technology and certain specific technological devices or advances in terms of them either being an extension of, or replacement for, certain capacities within the human being. Can you give some historical examples just for context and then return to the picture of AI in relationship to thinking?

Gopi: Yeah, in general, with any human activity, wherever the effort involved is to be reduced, that is where technologies kick in. For instance, one of the key areas is our limb activity. When the physical exertion of our hands and legs is taken over by devices outside us, that is in a way the first stage. Then things alter and change. When the Industrial Revolution was kicking in, what happened was a mimicking of what goes on inside the human being between the digestive and the respiratory systems. What happens there in a regular cycle became the engine. It is interesting to see how the human process is materialized and expressed in the engine. Then the engine is used as a prototype to explain, in turn, how human functions work.

So people think of their own bodies as engines. They don’t realize that, like narcissists, they are falling in love with their own picture, the picture that they themselves have created. The engine is where you put in fuel. There is burning, then an expansion and a contraction, an expansion and a contraction, which in turn creates the motion and rotation that literally powered the entire expansion of the British East India Company. The British Empire was basically running on this particular process. It is very similar to how physiology later came to view the human being: the eating function, the breathing function, and everything else.

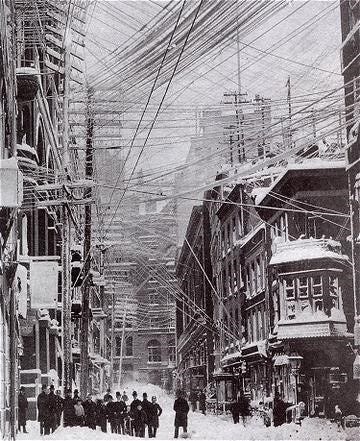

This externalization produced the technological change that came in around the time of the Industrial Revolution. Immediately after that, it moved further up the body: more and more, it was not the breathing or digestive processes or the activity processes that were thrown outside, but the human capacity for logical thought, or connected thought. That transformed itself into networks and everything that came along with networking technology: everything that went into laying the first telegraph cables under the Atlantic Ocean, everything that went into telephones, and so on.

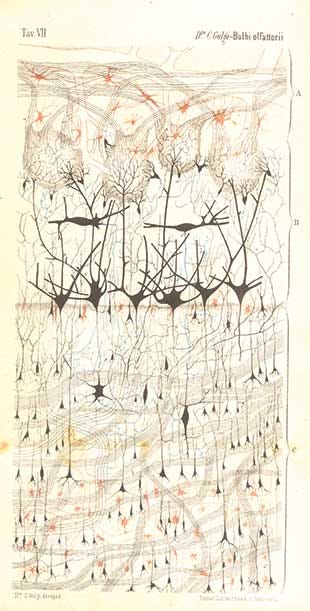

Each one of these became a representation of how we would normally connect one thing to another using our own brain. It became an exterior manifestation of that. Wires everywhere form a web, just as the brain, seen under a microscope, looks like a web of neurons.

Once again, the same process repeats itself and we start thinking of the brain as a telephone connection system or a network system. Or in its most recent avatar, we see it as a computer system.

Different waves of technological development kick in from time to time. In each layer, in each wave, there is a certain human capacity that we have put outside. In all of this, what drives it is our unwillingness to do the thing ourselves, for whatever reason, justified or unjustified. And once it’s out there, we no longer have to do the activity by necessity. We don’t have to exert ourselves by necessity as we used to. That is basically the transformation that has happened.

In the 20th and 21st centuries, we are looking at internal functions, not just things that are more or less based on our physiology. Now we are moving to things that have more to do with the intangible: our life of imagination and creating images, our wish to imagine something, our love for stories, our love for meaning in life. All those things start kicking in, and they become the technologies of television and image creation. They become the technology that goes into video games and the creation of virtual reality. That is where the meaning-of-life stuff gets gamified. That is where that hunger and need goes.

In the 20th century, at least, the hunger for images went into the world of television. Now, in the 21st century, with the relentless crawl of videos everywhere, there is an ocean of it.

And then, of course, the most recent wave is not only about a need or a capacity inside ourselves, but about relating to other people. That is where the fundamental hunger for social media comes in. Social media is where our capacity to imagine another person in their situation, in their circumstances, in their life path goes. That is a true connection we all hunger after. What we get is a kind of parody of it because, like all technologies, it’s formed and constituted so that you don’t have to do it. You don’t have to remember what a friend is doing or thinking or accomplishing in life; you can just look them up.

Same for everything that has to do with the sharing of information. You no longer have to struggle with communicating something, you just send a text. So these things come about because now we are looking at the social sphere and the higher human faculties which are getting involved in technologization. That’s what’s happening right now. We have gone through the full evolution that way.

Kristin: So in the case of a very simple tool like a telescope which enhances our ability to see, or even in the case of an engine or a computer (a powerful high-performance computer), couldn’t the argument be made that it actually enhances our capacity — whether it’s the ability to see, the ability to produce goods, move around, or the ability to think? Is it a bad thing?

Gopi: That is the fundamental principle of the lever, right? You have a lever, you put in very little effort, and you are able to do a lot. That archetypal motif of the lever cascades through the whole world of technology. It doesn’t matter which corner or how advanced.

The enhancement in the case of the lever is that you don’t have the same weight to lift, but you have to move it a greater distance. There is an offset, a certain offset, and that offset is where all of our participation actually kicks in. Now we have set up a situation where we don’t even need to push the lever all the way down. We can press a button or touch a screen. We brought our participation down to that level. Our activity is that of touching something, or waving a hand, or something like that. Since our participation is brought down to that level just by touching a screen, we are able to do a whole lot today in this world that earlier we were not able to do.

The offset is that our own internal capacity tends to atrophy if we neglect to pay attention to that offset. Just like if you got a car and you never walked — ever — you just stopped walking. When that lack of offset kicks in, there’s going to be a problem with something as blatantly physical as limb movement.

Our common sense has caught up after three centuries of using Industrial Revolution gadgets. We finally caught up there, but everything that has to do with inner work is still very far from consciousness. We don’t even recognize that we need to be doing something there in the first place: the equivalent of walking with regard to inner attentiveness.

Think of physical therapy. The whole idea of physical therapy is that once you lose a capacity, you learn to bring it back. We are now all in that state. We don’t realize that, as far as inner functions go, we are losing capacities day in and day out, the way muscles atrophy, and it’s proceeding at a remarkable pace.

The first thing to do is create an equivalent to physical therapy because we need to be able to get off those crutches and onto our feet again. Depending on the kind of crutch we are trying to get off of, that also ends up enhancing our latent capacities because some of the crutches we pick up by default. It’s only when you try to throw them off that you build a capacity which you didn’t have before. So that is how we are supposed to make use of the technology. In relation to the offset, that little gap where we have to create the offset towards what the technology allows us to do, that is where we come in. That is the part that I think you are trying to bring attention to.
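The lever trade-off Gopi describes is the textbook work balance for an ideal (frictionless) lever. The symbols and numbers below are illustrative additions, not from the conversation: $F$ is the force applied at each end and $d$ is the distance that end moves.

$$F_{\text{effort}}\, d_{\text{effort}} = F_{\text{load}}\, d_{\text{load}}$$

With an effort arm three times as long as the load arm, a 600 N load can be lifted with a 200 N push, but raising the load 0.1 m means pushing the effort end 0.3 m: $200\,\text{N} \times 0.3\,\text{m} = 600\,\text{N} \times 0.1\,\text{m} = 60\,\text{J}$. The extra distance is the “offset” where, in Gopi’s terms, our participation comes in.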

Kristin: Obviously, for a long time there have been imaginations about the future and robots. We have the singularity movement, the technocratic movement, and this almost fixation on the idea that AI will become sentient (whatever that might mean) and possibly evolve beyond human beings or destroy them. If you google certain questions about AI, you will almost always see suggested questions, probably AI-generated, that have to do with whether AI is going to destroy humanity. So this is really two questions. One is about the concepts that people unconsciously give over to this phenomenon. The other has to do with the language, the way we speak about it, and the words that are used, like intelligence, learning, reasoning, superintelligence. Can you talk about the way we think and speak about AI in relationship to what it actually is?

Gopi: The “what it actually is” part is important to address, but in the right way. You have themes like the Sorcerer’s Apprentice. You have themes like Mary Shelley’s Frankenstein, where something is created that goes out of control.

And the “out of control” happens simply because we are not able to control it. It is a human lack, a lack of human capacity. That is what is driving these narratives or these thoughts — fixation or fascination — because deep inside we know we are not doing something, not being able to control something. These come out of that agitation of the mismatch between what our technological world is showing us and where we feel ourselves to be.

People try to bridge that mismatch in very different ways. Some say, “Okay, the mismatch is nothing to worry about. It’s natural. It’s going to happen as it always has. It’s just the way things are.” Others say, “It is going to crush us,” because fear kicks in and an imagination kicks off: the Frankenstein imagination. That’s the natural result of a very deep, unconscious awareness of what we lack.

In the midst of all this, the fascination and the drive are also fueled by our laziness, which we shouldn’t forget about. We get pictures. Our own mind is giving us pictures of what we lack. What we do is take those pictures and plaster them over the machines. We are saying, “OK, we have to be more conscious, more aware. We have to be sentient of what we are doing.”

Translation: machines will become sentient. They will become more aware. So you have a huge projection because of this mismatch. Some people realize there is a mismatch, then they try to bridge the gap, and a whole host of psychological features is unleashed on the world.

One way to understand the fantasy elements woven in when we make these predictions is to look at the leap from the basic level of rocks, minerals, and tech devices to a leaf, a blade of grass. We have not yet made one. We have not yet made a cell. Not one single cell. We are looking at a technological reality in which we cannot make a leaf or a cell or anything, not even the mere beginnings of living systems. Yet we are fantasizing about creating things at the human level. Forget about the animal level. We are going straight to the human level, and we are asking: “Can they think? Can they feel? Can they do?”

It’s a huge leap that we don’t even face. We have crossed an abyss we don’t even realize we have crossed. That right there tells you something is missing. There is a connection, a line, a thread that has gone missing, and so the picture leaves reality and enters the fantasy realm. The fantasy realm has also been heavily filled with what Hollywood has produced. There’s been no end of Terminator movies, no end of dystopian technology movies of all kinds churned out of the human mind one way or another. All of that creates an effect. The right response would be: “Hold on a minute. Something is off. How can we find a way to fill the gap that we are all sensing?” The other responses are to yield and succumb to it, or to run away from it. Neither of these is in any way going to help offset that gap. We need active participation to offset the gap. It’s a continuous fight.

Kristin: In the past I have heard you talk about the language part of the picture. For me, that was really, really helpful.

Gopi: Language is part and parcel of this process when we start using human terms for machines. What are we actually saying when we say, “The GPS tells me that I need to go from A to B this way”? In that sentence we have treated the machine as a human being.

The problem is that it’s not entirely wrong. There is a background of hundreds and hundreds or thousands of software engineers who have all thought about a route from A to B and created a feedback loop for the machines to be able to do that. Or created the software to do that.

Their thoughts are lurking as shadows behind our use of the technology. But that’s what makes it possible. Every human being’s additional input makes it possible.

We are not entirely wrong when we say: “The GPS tells me.” But it would be more accurate to say: “A lot of people who have thought about going from one place to another through the medium of this specifically-designed software and hardware are letting us know that this is a possible route.” That’s what it really is, but we have made it a habit to leave out that hidden aspect of the human effort.

A lot of people have put effort into this. Ironically, the more effort they put in, the lazier we get. This effort is what is communicating with you through the GPS. The GPS is the net result of all of it. If we change our language, or if we fill in the remaining part of the sentence in our minds when we say these things, then we are at least in tune with what is actually happening.

Otherwise, again, we start losing touch with reality. Before long, in extreme cases like singularity imaginations, you have that detachment. Singularity is a good word, because it’s basically what makes an individual a single individual. It’s like the center point of what we could call our individuality, or our ego. It’s THAT. And THAT is what people think the machines will replace. They see it one-to-one. That is what is called the singularity. In other words, in the way they imagine it, there is no need for the human being to actually exercise their individuality. That one-to-one image is what they are seeing. That’s why, out of that fear, they describe these part-computer, part-human beings. It’s that one-to-one link that’s been made.

Kristin: In the case of AI, is it accurate or not? If not, how so? Does one call it intelligence or say that it’s learning?

Gopi: Yeah, it is almost entirely inaccurate. In this case, when you say it is learning, what is happening? People who are looking at the programs are learning . . . how to program better. So, again, you have to go through the loop that puts the person back in there. What differentiates the current AI from the AI from fifty, sixty years ago is that all the activities that we have thrown online have now become input data streams for the program. Earlier you would have like ten numbers that you put in to enable the program to do something. Now you have terabytes of information flowing freely to these programming systems. What is happening when you say “learn” is the creation of a feedback loop.

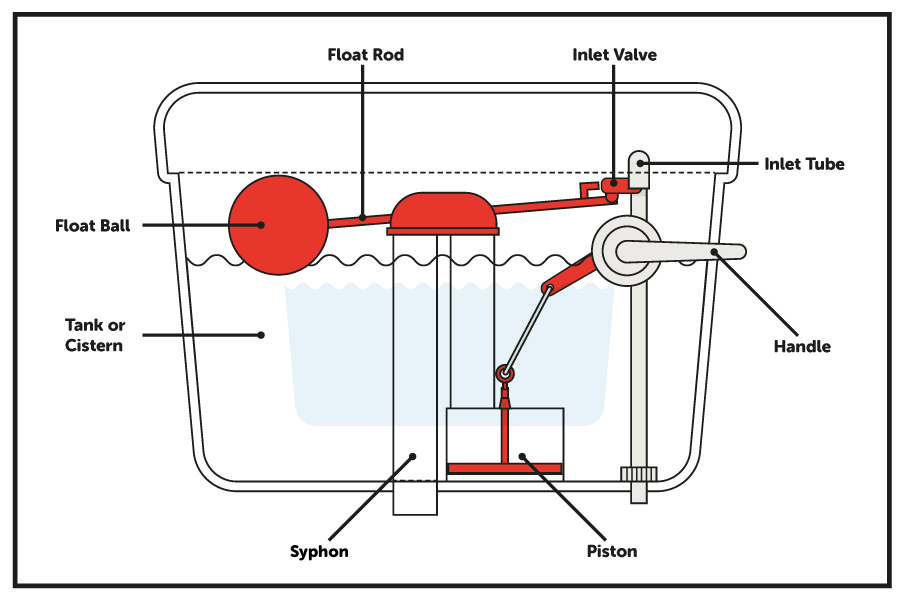

There are two kinds of feedback loops. One is mechanical, entirely mechanical, and the other is the mechanical being seen by a person and then tweaked by them. Let me give it to you with a very simple example, like a toilet flushing. A toilet flush is technically a feedback loop: when you flush, the little float ball descends and opens the conduit for water to come in, and the whole tank fills up with water. Once the ball rises back to a certain point, it switches off the water. That is mechanical feedback.

Would you say that the little water tank is learning to switch off after the water hits a certain level? Probably not, because what you have is just a different arrangement of dominos. So that’s one thing. Now suppose the flush doesn’t seem to be working right, or works differently than you thought it would. Then you go in, open it up, and figure out the chain, the link, the ball, and so on. You check the connections, maybe you tweak it, maybe you change the setting. That is you taking part in the process, so you are reprogramming it.

In the first case, the process is a closed loop. You have set it up in such a way that when you do X, the machine does Y. In the second case, there is an extra input. In this case, you think, OK, there is something different, and that’s your data. That’s what people pull in terabytes and terabytes of data streams for. The data informs them as to how to change the program, or informs them how to create that different feedback loop. It kind of looks like the program is learning. That’s where the two feedback loops get mixed.

When you mix up the capacity of providing the feedback loop of the toilet flush with the capacity of the human being to say “OK, I am going to change the program a little,” — when you combine those two, you have the present-day AI. So you can have an AI in the toilet flush, but we don’t think of it in those terms because it’s not as impressive. It gets more impressive when you start using the word AI. You bring in the word “intelligence” when the functions being done are those which we do with our minds. But we still don’t realize that whichever layer we are talking about, whether we are talking about pouring a jug of water or we are talking about contacting someone in India or China, the activity has only been made manifest once you have decided to materialize it in some way. That is what enables us to start talking about intelligence because we see more of our own human activities coming into play.

We start using those terms automatically, whereas pouring a jug of water does not evoke a feeling of the greatest human activity. The difference is actually in the way we assess that capacity. That is why we use those particular terms. The danger is that we start assigning a reality to it. That throws us off. False realities lead to fantasies.

To complete the answer to your question, people use these words: “learning,” “being intelligent,” and “answering.” Or, in the most recent versions, you can get AI to write reports or lawsuits. Every human capacity that we have seen as a distinct profession is now becoming something we are trying to automate. This is, in a way, a singularity: human capacities being transferred over step by step. We lose sight that it’s a human capacity by calling it different names and assigning it to the mechanical interface.
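The two feedback loops in the toilet-flush example can be sketched in Python. Everything here is a hypothetical illustration (the names `fill_tank` and `person_adjusts`, the units, the numbers), not a model of a real cistern: the first function is the closed mechanical loop, and the second stands in for the person who opens the tank, looks at the result, and changes the setting.

```python
from __future__ import annotations


def fill_tank(level: float, shutoff_level: float, inflow: float = 1.0) -> list[float]:
    """Loop 1: purely mechanical. The float rises with the water and closes
    the valve at the shutoff level. Same setting in, same behavior out."""
    history = [level]
    while level < shutoff_level:  # float is low, so the valve stays open
        level = min(level + inflow, shutoff_level)  # water flows in, never past the shutoff
        history.append(level)
    return history  # float has reached the shutoff point: valve closed


def person_adjusts(shutoff_level: float, observed_full: float, wanted_full: float) -> float:
    """Loop 2: a person looks at the result and changes the setting.
    The observation is the 'data'; the decision to change is theirs."""
    return shutoff_level + (wanted_full - observed_full)


if __name__ == "__main__":
    setting = 8.0
    first = fill_tank(0.0, setting)
    print(first[-1])  # 8.0
    setting = person_adjusts(setting, observed_full=first[-1], wanted_full=10.0)
    print(fill_tank(0.0, setting)[-1])  # 10.0
```

Nothing inside `fill_tank` changes between the two runs; the different result comes entirely from the new setting the person supplied, which is the distinction Gopi draws between the two loops.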

Kristin: It’s interesting. One particularly striking example of assigning a reality through language (through a lack of awareness of how we use language in this regard) is the robot Sophia. Remember her? She was like an ambassador and a citizen for the UN. Apparently she shut down. I didn’t look into it, but I just came across a headline saying she died. I thought that was a particularly interesting use of language because, of course, she was never alive. So there is a real tendency to project these kinds of things.

Gopi: Alive? She was never human. You frame the discussion by using those words. It’s a technique of framing. You start using certain words, and people start thinking in those terms, and before long they are caught up in it because the language has trapped them.

“She helped me do this.” It is “she” first of all. You have Alexas and Siris being feminized. The GPS voice is most often feminized, so you have this fantasy of feminine robot secretaries going on which is feeding into everyone’s lives — your own personal secretary, technological secretary.

That again, you see, is a huge projection. It is an enormous projection. The more we lose sight of humanity and ourselves, the more we tend to project it outside. That is the universe’s way of correcting it, I guess. At least recognize things when you see them in the mirror. Don’t fall in love with it.

Kristin: So, back to the feedback loop, the question of learning, and humans being involved in reprogramming to create these further layers. There is this idea, and I think a lot of people have it, that a machine learning AI, deep learning, or superintelligent AI is a runaway AI that doesn’t need human beings to engage with it after a certain point.

Human beings engaging with AI can mean two things, and I just want to make a distinction here. One is the programmers who originally set it up, who may in some cases go back and tweak it. The other possible way human beings might engage is through the actual data collection that the machine harvests in order to broaden its information storage.

This idea that a machine can be programmed to almost continually reprogram itself and doesn’t need human beings to come in and reprogram and tweak it is pretty strong in the collective psyche. Where in terms of the toilet flushing example and the feedback loop do these machines need humans to manage them? Is there a necessary upkeep for them? Or is it the case that some of these really powerful AIs are developing in some capacity on their own?

Gopi: When you say reprogram, let’s bring ourselves back to the water level in the toilet. Imagine the water level is the net result of your entire community’s decision about where the water level should be from moment to moment, from second to second.

Would you call that reprogramming? Or would you call that a continuous unconscious participation of everyone in the programming? That is actually what is going on. You have the participation of all people, not just the programmers. That is what those data streams are for. The programmers are setting it up so that when you click a button, you are reprogramming something. So it has shifted from only the programmers doing it to the programmers setting it up so that everybody does it.

And that process is what is called “doing it by itself.” That’s what is happening. The reality is that more people are involved, and more unconscious decisions to program are involved. But as far as the machine is concerned, it is still programming. There is no consciousness issue there. If you press a button, you press a button: whether you knowingly enter something into a program or unknowingly feed into one, the button is still pressed. That is what is going on.

People say, “It’s more powerful. It’s more this. It’s more that.” Here is what is actually happening. It has only become possible now because there is a way to continuously poll your entire community to figure out what level the water in your toilet should be. The technology has made it possible for people to send that information and for it to go into your toilet flush.

That’s the difference. That was not there before. It was not possible to make everyone a programmer, an unconscious programmer.

Now you have covered both sides: the conscious programmers and the unconscious programmers. The AI in that sense has encompassed, and can encompass, all of humanity. We wind up programming things that have to do with our “feeling life”: when we like something, when we dislike something. That goes into the settings that conscious programmers have set up for our feeling life, such as ratings. It goes in there. We provide the data. We are actively participating and telling it, “Do it this way.” Then, of course, their programs kick in and say these are the inputs, these should be the outputs, and that carries it forward.

As for deep learning, it is the same thing. Behind all the fancy words, that’s what they are doing. You are engaging the activity of people across the entire globe to push forward the capacity of the programs.
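The shift Gopi describes, from programmers choosing the setting to everyone’s inputs choosing it, can be sketched in a few lines of Python. This is a hypothetical illustration: the functions `aggregate` and `simulate`, the use of a plain average, and the numbers are all assumptions. What it shows is that the rule is written once, and the behavior changes from round to round only because the inputs change.

```python
from __future__ import annotations

from statistics import mean


def aggregate(inputs: list[float]) -> float:
    # Fixed rule chosen once by the "conscious programmers":
    # the setting is simply the average of everyone's inputs.
    return mean(inputs)


def simulate(rounds_of_inputs: list[list[float]]) -> list[float]:
    # Each round, the shutoff level is recomputed from that round's inputs.
    # The code never changes between rounds; only the data does.
    return [aggregate(inputs) for inputs in rounds_of_inputs]


if __name__ == "__main__":
    rounds = [
        [8.0, 10.0, 12.0],   # round 1: three households' preferred levels
        [12.0, 12.0, 15.0],  # round 2: preferences have shifted
    ]
    print(simulate(rounds))  # [10.0, 13.0]
```

From the outside it can look as if the system “decided” to raise the level in round 2; inside, the same averaging rule simply received different clicks.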

Kristin: I remember probably twenty years ago, there was a lower-level AI project. It was a website you could go to and there was a video-looking game with a not very developed face. You could chat with this supposed AI.

Gopi: Eliza?

Kristin: No, it was a man, I think. I can’t remember. It was pretty short-lived, because soon after it launched and went live and the public was able to engage with it, it started getting really nasty, which is also kind of a pattern. They wound up shutting it down because it was cursing and threatening people. There was some interesting research done (I’m sure there’s a ton on this) that pointed out that the AI was just developing its responses in direct relationship to how people were talking to it. It was picking up the language and the intentions or motives of the communications, and then in turn giving those back to people in its responses. It was clearly a reflection of the small community of people that decided to go engage with it.

So now, if we have a situation where these really powerful AIs are taking in information globally (not just from a small, select community that might voluntarily go engage with one, but actually sucking in data on everyone), what does that mean for what the AI might generate? Because it is always going to be reflecting back to us what it took from us in the first place, right?

Gopi: Correct. Even in your small community you have both conscious decisions and unconscious, more primitive impulses. That’s just part of being human. Once you start looking at huge numbers of people, the lowest common denominator keeps dropping. That unconscious kind of reflection is what we are dealing with. In this case, we are dealing with the part of us that we, again, have not mirrored adequately. There is no way to control it, no way to restrict it, when you become the unconscious programmer. Right now we are dealing with the big shift from the conscious to the unconscious programmer, and everything that comes along with that transition: our unconscious feelings, our unconscious agitations and angers.

All of that is one set, but there is also the lack of consciousness in terms of how we participate because the systems are set up to do things without our knowing. Say, for example, you drive all over the place. Your license plate is picked up wherever you go. You’re not conscious of that happening, but that data is being collected.

You don’t even have to be conscious anymore at any level. You don’t even need to press a button in order to engage. Cameras take care of it for you. All kinds of surveillance devices take care of it for you. That’s what’s happening. We have switched over from a conscious engagement with the computer system to an unconscious engagement. That’s where the oppressive aspect comes in because we don’t have a choice. Meters are set up. Feedback loops are set up. You don’t even get your hand on the switch.

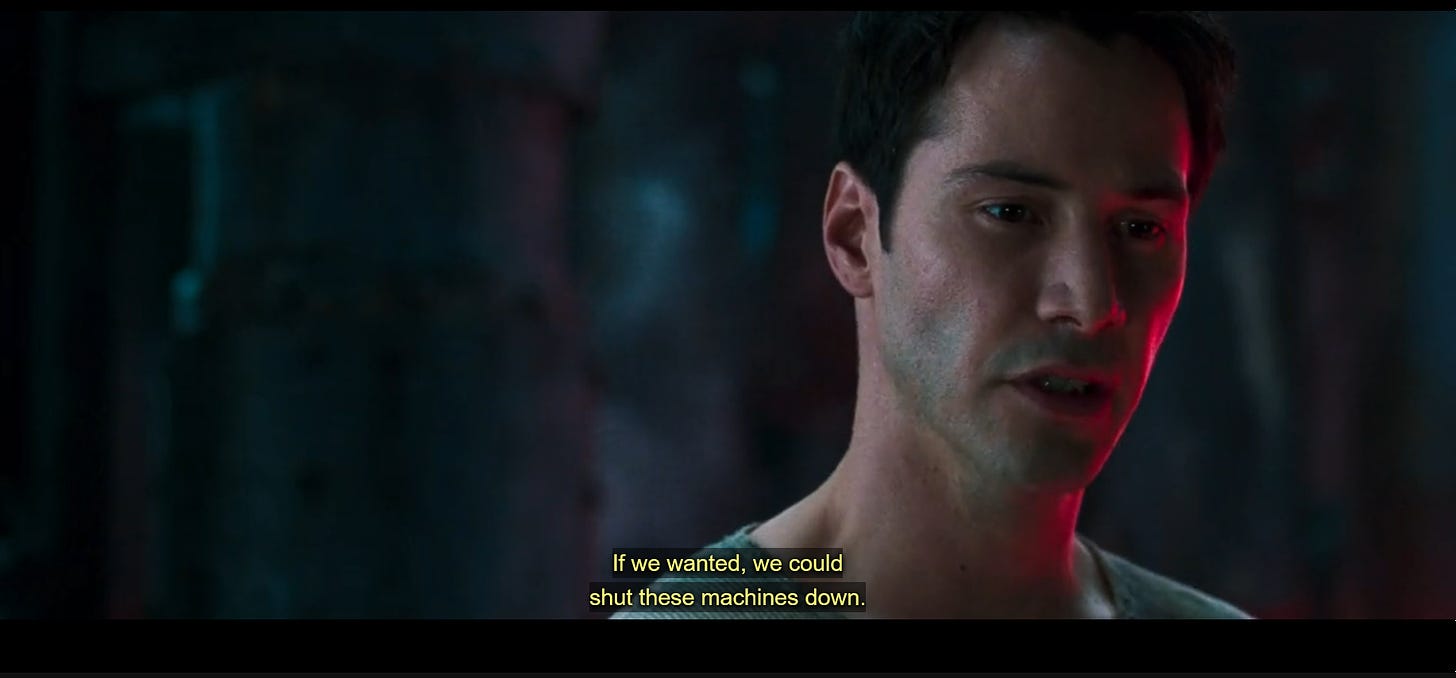

The switch also brings me back to an idea that has been promoted in movies like The Matrix, where Neo discusses with another character how they can still turn the machines off, no matter how powerful they are, until (and this is the point) we ourselves set up redundant power sources.

Suppose we ourselves set it up so that when electricity is missing, it takes in solar, and when solar is missing, it takes in something else. If we ourselves set those things up and put the machine in a place where our switch is not required, then it runs away from us. We literally set the train on the track, on autopilot, and let it go. And then whatever happens, happens. That is the direction we are tending in as far as these things are concerned. And we are doing it not just with technical things like trains; we are also letting the things that are unconscious in us kick in.

Whatever we have not resolved in ourselves suddenly becomes a global data point and ends up programming the machines to reflect back to us what we did not want to deal with. That’s the human condition right now. We are not coming to our capacities on our own. We are being forced to look into the mirror that we ourselves have built. But there is a great reluctance to acknowledge that what we are seeing are parts of ourselves, and a fascination with wanting to make it something else.
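Kristin’s chatbot story and Gopi’s mirror image can be illustrated with a deliberately crude Python toy. It is an assumption for illustration only: the class name `EchoBot` and its “repeat the most frequent phrase” rule are hypothetical, and real chatbots (including the one Kristin remembers, whose design is not described here) are far more elaborate. The structural point it shows is that whatever the bot “says” is drawn entirely from what it was fed.

```python
from collections import Counter


class EchoBot:
    """Hypothetical toy chatbot with no views of its own: it can only
    repeat phrases that users have sent it."""

    def __init__(self) -> None:
        self.heard = Counter()  # phrase -> number of times users sent it

    def listen(self, message: str) -> None:
        # "Learning" here is nothing more than recording what users say.
        self.heard[message] += 1

    def reply(self) -> str:
        # Answer with whatever it has been told most often; before anyone
        # has talked to it, it has nothing to say.
        if not self.heard:
            return ""
        return self.heard.most_common(1)[0][0]


if __name__ == "__main__":
    bot = EchoBot()
    bot.listen("hello")
    for _ in range(3):
        bot.listen("you are awful")
    print(bot.reply())  # you are awful
```

Feed it mostly hostile messages and it answers with hostility; nothing in the code chose that outcome except the inputs.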

Kristin: If AI really isn’t that powerful, and it’s really just a complex series of dominos set up so that each one falls and hits the next, which makes it somewhat predictable, how come these systems are worth so much money? Obviously, it’s a field at the forefront of the evolution of the world right now. It’s thought of as something that arms a country in such a way that, if it has the most powerful AI, it becomes the most powerful country in the world. So what is it that they are actually doing, and what are they capable of? I’ll give you two examples. There is an AI called “Guardian” that is described as a nervous system. It’s used by the national security state and the Department of Defense in this country to . . . Let me find the language here. It’s a

“Digital nervous system for use in strategic, operational and tactical decisions at the speed of relevance.”

It produces modeling based on threats from without, and on possible tactical needs to engage in some kind of defensive action before a threat even materializes. That’s one. The other is the InstaDeep AI, purchased a couple of months ago by BioNTech for $681 million. Apparently, during one of its funding rounds last year, this AI was used to predict how deadly one of the Omicron variants was. They used this machine to decide whether or not a variant was actually infectious and dangerous.

Gopi: To answer your first question, why is a set of dominos falling so hot? And why is it that it is at the forefront, cutting edge, and all that stuff? To extend the analogy I used earlier, it’s because now every human being can tweak which domino can fall in the sequence.

You’re enabling the audience. If you imagine setting up a set of dominos in front of an audience, you are enabling everyone to come in and each plunk a different domino in a different direction.

Now you can see that is a totally different ballgame, that kind of feedback. You still have the dominos, but you have a lot more people engaging with them. That’s what makes it a source of power.

For example, I was telling you earlier that it feeds on our laziness. A good example of that is . . . let us say something which is at the forefront of what people want, say an iPhone. If you drop the price by $300, you will see a surge of purchases rise up. Now, that’s power. That’s your lever right there. If you drop this, if you press this down — you may just press a button, or just tell someone and the price goes down. And then a large number of people act in a certain way.

When you have both of these things set up — a large number of people doing something allows you to make a decision and your decision in turn makes a large number of people do something — because of their own laziness tendencies and their own predictability, that combination is what makes it a formidable force because you are talking human civilization-level forces.

That’s where you are looking at special interests like the Department of Defense. Any of the people with military intentions, they can see this as something that can be weaponized. If human activity itself can be weaponized, there is no greater weapon than that, as far as we know. There is one level where you weaponize, say, the activity of a chemical in a weapon like guns or missiles that blows something up. There is another level where you may use the activity of the human mind as far as emotions go and create a riot. That is another level of activity. That is the psychological manipulation of people or of societies.

Now you are talking the next level. You’re talking about directing people as far as their thoughts are concerned. Most importantly as far as their attention is concerned because our attention is the one point from where all of our human activity begins. We attend to something and then we think about it. We feel something. Everything follows after we attend to something.

And that is the focus of the AI, because whatever we are attending to becomes part of the feedback loop. And even what we are not attending to is made part of the feedback loop. But the part that is weaponizable is our attention.

As layer upon layer of human faculty is engaged within this feedback loop, you wind up multiplying the power of the predictive system.

Why did it matter, for example, that the variant was predicted to be more or less dangerous? Why does it even matter?

It matters only because people think it matters. There is no other logical reason for it. Unless people actually think that something popping up on a graph is relevant to them, unless they attend to it and then align the rest of their activities to it, it is completely meaningless. But they have been trained to do this. Companies have spent millions of hours creating charts and graphs to guide the decisions of board members, CEOs, and everyone else steering the ship.

This is how we have learned and habituated ourselves to respond. We have trained ourselves to respond to graphs and charts. In other words, what a machine spits out. In other words, we respond to that lever.

First, we have to establish that lever. If no one cares that an iPhone is $300 cheaper, you can drop the price all you want. The human link that gets missed is predictability: our tendency to do the same things over and over again, or to like the things that are most comfortable. Our love for comfort drives and fuels the power that is then given over to AI. That power in turn gets taken over by large special interests and weaponized as much as they can.

The brute force involved in the technology and the power requirements make it so that those at the nation-state level are the ones who can play in that realm. Or larger financial conglomerates can play in that realm, not regular people. That is what differentiates the impact we can have in the world through machines versus the impact we can have in the world based on our own capacities.

Kristin: Apparently, the decision to do a “control burn” of the chemicals that were on the train cars that crashed in East Palestine, Ohio, came from modeling that was done by the Department of Defense. The governor of Ohio put out a press release (which I’ve seen), and apparently the state communicated with the National Guard and the DoD regarding what to do based on modeling.

Gopi: We saw that with the Covid crisis as well. Based on the modeling that came out of the UK and even out of Seattle, the lockdown procedures were adopted. Same idea.

Kristin: So this brings me to this other question. There is this conspiracy theory that there’s one extremely powerful uber AI running everything, making all the decisions about everything that’s taking place, or has taken place over the last three years. Seems like just another invitation to project and give power where there isn’t any or shouldn’t be. But on the other hand, it is true that quite a bit of power is being handed over to these machines if we allow them to spit out these models, or we could call them directives based on what humans choose to do with those models. But the issue is that, increasingly, isn’t it the case that human beings are choosing to take those, whatever the data is, as directives?

Gopi: Yeah, they’re using that to displace their own decision making. Once again, it’s laziness at a totally different level, because now making a decision for yourself and for millions of people has become something you don’t want to do anymore. You want to outsource that. Of course, if you want to outsource that, you can roll the dice, do something, and let the laws of mechanics take precedence.

Or you go through this alternative route. These models have become the modern-day oracles. That’s what it is, like the Oracle of Delphi, where people would go, ask questions, and get their responses. Here it has become a kind of substitute oracle, an artificial-intelligence oracle.

Of course, it is fed by the exact opposite. The earlier oracles were at least in some way tied to spiritual realities, where they would look at the progress of people. Here, because it moves in the unconscious direction, it has nothing to do with the progress of people. All of that feeds in and creates the model and people’s decisions. Unconscious decisions all get congealed, and then that becomes something somebody in power decides to use as the basis for action, copping out on their own decision-making capacities. That’s when we actively give over the reins to something we can’t control. We have chosen to do that. The more we choose not to choose (the more we choose to just abandon our decisions), the more it feeds the AI situation.

The kind of uber AI you are talking about has also been part of the depiction in Hollywood. I’m not sure if you are aware of the series called “Person of Interest”?

It’s a TV series by Jonathan Nolan, and in it this precise idea is seeded: an AI that is basically hijacking every text message, every keystroke, every single audio and video feed across the city, and then comes to decisions. One of the episodes, called “Re-assortment,” is interesting because the AI decides it wants the DNA of the entire population. As a result, it creates a virulent virus, then gets people lined up for vaccines.

Kristin: What’s the date of that show?

Gopi: Between 2010 and 2014, I think.

These sci-fi stories introduce the thought that all you have to do is be willing to give away another aspect of your thinking, and then you can actually create these scenarios. That’s what all of these sci-fi stories feed on: imagine what the world would be like if one particular human activity were removed from it. That automatically leads to a series of consequences, and that is what creates the dystopia, or whatever kind of world comes out in the fiction. It’s almost like we are mentally exploring all the different ways we can end up with a society when we don’t exert ourselves. That’s also one of the reasons people find so much science fiction coming true: the path of least resistance is still the path of least resistance. Whenever you take the path of not doing something, it’s easy to tell where that will lead. You don’t need a lot of brains to predict what will happen if a person is lazy.

But then you say, “Look, I knew what was going to happen. I predicted the future.” No, all you did was bank on the laziness and the reality that comes out of it. You put your bet on that. That’s what made you into the modern-day prophet, the modern-day oracle. So this is what is happening: We are creating a shadow oracle with its own prophets, with its own system, and people aren’t even aware of it.

Kristin: So to be clear, the machine itself is still just a series of dominos set up, but the way we engage with it allows it to act as a modern-day oracle.

Gopi: Exactly. It’s what we do with it, how we adapt and make decisions, how we use our capacity, and also how we respond to what is given. All of these things are actually the fuel of the AI. In that sense, it has almost nothing to do with the technical capacity or technical progress of the machine. We could very well have built this kind of set-up even without the advances in semiconductor size, and so on and so forth. It just would have been very cumbersome and would have taken forever. So it would have been unfeasible only in practical terms; theoretically, conceptually, it was still possible.

And now we have made it a reality. We have decided to engage with the dominos in this particular way, and that is the world of AI we are having to handle.

Kristin: Over the last three years, I’ve spent a lot of time in social media spaces where the majority of the people had fled censorship. They were interested in the truth about what was going on in the world, and also in organizing some kind of resistance or dissent with regard to the lockdowns and the pandemic measures that were taking place.

So, among that demographic that moved to social media platforms that were supposed to be private, secure, and censorship-free, like Telegram, Gab, Parler, or Truth Social, what I noticed were spaces where it was clear that data was being harvested. I’m not going into what those indications were, but there were a number of easy-to-identify flags that made clear data was being harvested on members of these groups. Then what I would see was a pattern of ideas, concepts, possible actions . . . especially if they involved the possibility of the outcome everyone wanted. I’ll give you an example, especially with parents and schools and masking. There was a series of different ideas.

One was affidavits. A few months later, it was going after the bonds of the school board members. And then the next trend came along. What I would see, and it was confirmed in real life because these ideas would also take root within my own community with people I know, and in our own information-sharing spaces, not just out there in the big social media spaces, was that there were trends, and everyone would get excited, like maybe this will be effective. These trends would go viral, and then really quickly there would be a sort of dragnet or capture-space set up. In the case of bonds, I remember a website with related social media and branding that cropped up right away, called Bonds for the Win. It got promoted by a lot of the right-wing media apparatus: people or influencers who can quickly inject a brand or a concept into the whole social media space. Then, you would see it happen, everyone would be like, “Go to Bonds for the Win. If you want to do this bonds thing, go here, go here.”

And you’d go and you’d fill out forms. Let’s just say that none of these trends were necessarily effective. There are a couple of different things to say about that, but the reason I’m bringing it up right now is that the pattern of harvesting data and monitoring potential trends in action from the dissenting community seemed to be met, again and again, by something that could capture the action and mitigate its possible effectiveness.

So there are two possibilities. Either there’s a group of people monitoring all the social media in real time and tracking trends, and there’s a command center where someone says, “Launch Bonds for the Win. Capture all these people.” Or you have a situation where data is being harvested and trends are sticking out in models, charts, and graphs. You have a machine that can actually spit out, “This is the trend. This is this week’s trend. This is this month’s trend. This is where parents are likely to pour their energy over the next two weeks.” Then possibly it gives further directives, or a team of people or a control center can act upon it.

I just wonder about that layer within the information-sharing space that works to closely monitor trends in order to mitigate the effectiveness of people. How do you understand, or do you at all, the role that AI plays there?

Gopi: I think the role AI plays there has got to be huge. All of these social media trial runs were not for nothing. Take the whole Twitter trending process, where people dutifully hashtagged everything and provided a way for media companies to learn how to predict and control trends. Those techniques are not left aside unused. Everything adds on to the previous layer. That is the modus operandi of the technical development we are seeing.

If there are ten different AI processes, they all feed into each other. Or if there is one thing and then a next thing, the next thing always builds on the previous, so both of these tendencies are happening with any AI. What you are seeing is that combination being effected, maybe at a higher level, maybe at a lower level, maybe at the local level, but the quality of it is almost exactly the same. When you look at certain trends, what are you doing? You are basically saying this is what people are attending to. Which is what we discussed earlier. It’s the weaponizing of people’s attention, bypassing their conscious decisions in a variety of subconscious ways.

So when you can take that, you can weaponize it in both ways. You can promote something that people can attend to, or you can suppress something that people are attending to.

Both those levers, or knobs, are within your field of operation at that point.

I don’t think there is any doubt that someone, somewhere is making a call. Whether it’s a continuous call, constantly looking at certain trends and making a human call at every point, or whether you program the AI to produce a chart on which you base a call, a series of calls, or one single call built into a program, it doesn’t matter in terms of the idea. It’s still the same thing. You are still weaponizing attention. The fact that we have social media companies means we are entering a realm where the interest is something other than communication. When you look at a company, a company’s primary interest is profit.

Whenever you look at a government entity, its interest winds up being the state nexus that is created. So you have two different interests. Neither is about communication. Communication just happens to be in that field.

When a company wants to set up activities in the communication space, it has to be given permission by the government to even incorporate in the first place. This means there’s always a back-and-forth, a give-and-take between the government and the private sector about how much of the data that comes in will be used for interests other than communication. Sooner or later, those interests conflict. When governmental and private interests conflict with that of communication, you have censorship.

This is how communication transforms itself. It morphs when it touches the governmental apparatus and governmental interests. It morphs into propaganda. It cannot help but do that.

That is as strong a law as the law of gravity. When communication intersects with governmental interests, you get propaganda. When communication intersects with profitable interests, you get marketing. And when you combine those two, then you have forced marketing.

That is what we are dealing with. In the space of artificial intelligence, both private and public sectors use the computing powers that they can access (which we spoke about earlier) which are resource-intensive devices. These are different types of weapons. These weapons require a lot of resources, and these are the entities that can have the resources.

We are caught in the crossfire. We are caught in these conflicts of interest. So censorship is par for the course. Our trends being manipulated is par for the course. Effectiveness being reduced in certain things is par for the course. Effectiveness being artificially enhanced in certain useless directions is still the same thing.

These off-ramps, or red herrings as we call them, have become very effective now. Then there is the unconscious aspect we spoke about a little while ago: the more of our unconscious drives wind up in this feedback loop, the easier it is to manipulate. That’s because now you have access to deeper impulses in people than you otherwise would have. You can create a mob with the press of a button. Not because everyone is in the same place, but because everybody feels this at a deeper level. So you have gained access to this layer of mob creation.

That is how things go viral. What is something going viral? It’s a mob, but on the internet. It’s an internet mob. That’s what it is.

The Ice Bucket Challenge: why would something that ridiculous go viral? That was another test run where you see what happens when all the celebrities do the same thing. It can be the most meaningless thing, but that’s a trend to study to understand how you can steer a population in a certain way. And you can pose the question: What happens when all the celebrities do the same thing? Just give them something stupid to do, but it’s important they all do the same thing.

What kind of effect does it have on society? How far will it spread? And there you go. Once you understand how that terrain works, that landscape works, you are operating within the realm of human attention. In that realm, you are no longer in the realm of physics and weapons, you are operating in the realm of human attention. That’s the material through which you do and direct things. That’s how I see the AI interacting with those who wish to direct how very large groups of people move. It has been made possible by that tech.

Kristin: One of the things that I’ve been studying is cognitive warfare. It already feels like a tired term, but it is actually badly misused as a concept and a term. When I went and looked at the very few papers that actually articulate and outline what it is, I found a wealth of information, as far as I’m concerned, about a lot of the weirdness we are dealing with out there in the world. I just want to point out a couple of things and get your thoughts on them. One has to do with truth and the other has to do with trust.

In the truth arena, of course, we’ve seen since Trump’s presidency this obsession with true and false information expressed in a variety of ways through the media, language, and terminology:

Truth seeker, fact checker; I know I don’t need to tell you. I know you understand what I’m talking about. There’s this amplification of what has existed for a long time. It is shaping multiple paradigms through the media, and it increased really dramatically during Trump’s presidency, to the point where there’s this chasm. You see two almost totally different realities co-existing, not well, in American society, based on this divergent media.

From a sociological perspective, it was amazing for me at the time to go back and forth between right-wing and left-wing media and try to empathize with the people watching it.

I’ve had this bizarre sense or question about the nature of truth for the last few years, especially in relationship to, or let’s say, exclusively in the realm of information-sharing spaces via devices.

I’ve tried to articulate it and I’ve had a hard time. I say, “The only way I can describe this is that it is both true and untrue at the same time.” I’m trying to further understand what I’m experiencing. Let’s say it’s a post on social media, and let’s say that the content in the post is true and the message is true (as far as I know). So there’s the content, and then there is the medium, the vehicle, the packaging of the post. Then there’s the intent of sharing the post. There may be more, but those are the three layers I’ve identified, and I’ve asked myself: Do all three need to be true? And I think that they do.

Gopi: Yes, agreed. You may remember an old phrase: “The truth, the whole truth, and nothing but the truth.”

Kristin: Yeah, I do.

Gopi: That is where that comes from. There was an instinctive knowledge that all those layers have to be aligned correctly. So there is the truth, which is something like “x equals true.” Then the whole truth provides the context, the entire setting, the global setting, which changes from moment to moment, so one truth can become false in a fraction of a second because the setting changes. Then there is “nothing but the truth,” where you have the intention behind what you are saying.

Is the intent deception? As Shakespeare wrote in The Merchant of Venice, “The devil can cite Scripture for his purpose.” What you are dealing with is that. It’s the intent, not the scripture. All these layers are even tougher to deal with in today’s social media space because of a conflict of interest: social media is born out of the desire not to exert yourself, while these things you are asking people to do require exertion. They are working in opposite directions. That is why it is so difficult to establish this. It’s easier to establish when you are meeting people, going somewhere together, already on the train that involves exertion. But the further you descend from human-to-human, attentive interaction, the more issues you have. Even when you are in front of someone, you can be lost in your own movie, just waiting for the other person to finish so you can say what you want. It can degenerate right there. It doesn’t require a text message. In fact, text messages showed up in life because things had already degenerated there.

You have that degeneration of truth happening from the initial human-to-human interaction all the way down, layer by layer: social media, texting, video, the whole landscape. The whole spectrum it goes into after that is all downhill from that point. It makes it more and more slippery to get activities going that move in the right direction. That’s what you are dealing with.

Kristin: I think it’s interesting. The example I was going to give is “Everyone needs to wake up.” So it’s a true statement, but the use of “waking up” over the last three years didn’t feel to me like it would lead to an awakening.

Gopi: Yeah.

Kristin: So you see this taking of true and potentially rather potent terms disseminated and in a way made meaningless or ineffective as a result of that. Like: “the lion,” “the warrior,” “my tribe.”

Gopi: Oh, that’s the worst, the “my tribe.” You’re combining the truth value with, again, the older and more primitive impulses that we have from our history. So you have “the true team and the false team.” It’s almost like sports teams at that point. It gets really primitive.

Kristin: “We are winning.”

Gopi: Yeah, and “wake up,” when it’s used as a term . . . . There’s no point telling a stove to warm up. What you have to do is put fuel and light and then it will warm. I think we are dealing with something very similar.

The words “wake up.” How? What way? What is the awakening process? What am I supposed to do with it? How am I supposed to do it?

None of that is in any way a part of that discussion. It’s more like “Press this button” and you’ll wake up. “Oh, wonderful!” So again we have that cross-current of “Be lazy, then you’ll wake up.” Caught in that conflict: that is what we have struggled with for the last three years. You have all the terms. You have cognitive warfare. You have waking up. All the words in the vocabulary are present, but because of the framing, because they are not aligned with actual reality and an active developmental process in the person, they land in trouble.

The tussle you described earlier on, between “what is true, what is not true,” that is the Hegelian tussle. That’s what Hegel tussled with. That’s how he was able to create his entire system of philosophy. The problem for us is that this very precious treasure was taken up by those with an economic, materialistic mindset, injected into society, and kept out of every other field of human endeavor. That was the problem. When something as precious as the knowledge of the interconversion of truth and lies gets weaponized, you are in trouble, and that is what we saw ramping up during Trump’s presidency. He throws all sorts of conflicting emotions into the social mix and inserts certain truths. It was almost to the point where people were like, OK, if Trump says it, it is true, or if Trump says it, it is not true. It got so personalized. It got entangled with the personality cult, a Caesarean personality cult. Again, you are harking back to ancient Roman times.

That is what you are seeing. That is what was unleashed. You need a softening of the people first so that their truth compass gets all scrambled. And then you can throw in anything. As long as you make Trump say one thing, everybody goes either this way or that way. It actually becomes a rudder. You can use him as a rudder.

Kristin: I’m researching this “hacking the human being” and messing with the development of the cognitive process itself as a new, articulated form of warfare.

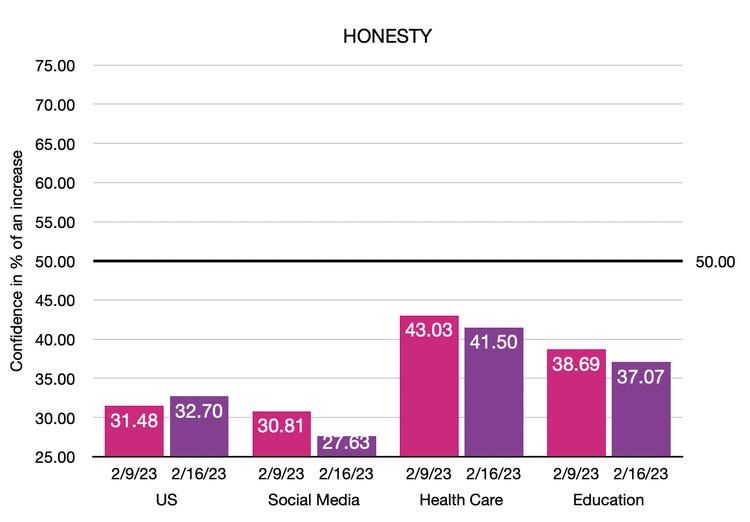

The company that won the NATO Innovation Lab Challenge in October of last year is now going on to develop some of the tools. I’m sure they have them already, but this is what is publicly available. This company is called Veriphix, and it is going on to develop some of these tools for NATO. What Veriphix does is harvest a ton of data. It’s a data analytics company. But instead of just demographic data, you’re dealing with psychographic data. You are dealing with belief systems. They want to be able to target people down to the individual, to have enough data on every single person that each one could be targeted individually. I can’t imagine that is possible, but nonetheless, they’re moving on.

One of the things this company does is graph trust, because cognitive warfare is focused on trust. It’s focused on the decision-making process, so that it can move people like you were describing. Either you love Trump or you hate Trump, but the point is you love or hate something so much that anything can be brought into proximity to it and you will predictably move toward it or away from it. This becomes a really, really powerful tool.

Those kinds of tools are what’s interesting right now to militaries in terms of being able to completely control societies. The way that this company graphs trust is in the following four areas. This is particularly interesting to me. Trust in government (that includes law enforcement), trust in social media itself, trust in health care, and trust in education.

They create a weekly graph. They say, “Due to this event and this event, trust in social media has . . . . Since Elon Musk has purchased Twitter, trust in social media within this demographic has gone up. Trust in social media in this demographic has gone way down. Since critical race theory was hot in the news this week, trust in education has plummeted. Since the announcement of possible bird flu vaccine development, trust in health care has gone down.”

And they map it. This is the thing that is so fascinating. The main weapon that has actually been articulated by NATO is leaks. Leaked information. Strategically leaking information as a form of warfare. They have a section in one of their papers called “false information not required.” Intentionally injecting false information into information spaces is considered information warfare. They are not even interested in messing with false information. They want to take true information and strategically leak it over a period of time. The word “dribbled” is used. When I read that, I reflected on the last three years and the number of what seemed like strategically dribbled leaks: the Fauci files, the Twitter files. Now we have the lockdown files. The landscape is saturated with whistleblowers. We know a bunch of them aren’t even real whistleblowers.

The idea with the strategically dribbled true information is that it destabilizes society in general in relationship to the system the information is dropped in.

Gopi: What you are describing was already present in WikiLeaks. It was a limited hangout. That’s the limited hangout stuff. You throw in true information at certain points, in certain ways, in certain packaging, so that it has an effect you can handle and manage.

That is why they are focusing on that, because what is trust? When you trust something it is a measure of predictability. So you have a lever you can push to move people who trust in these institutions. That is the trust.

What happens when people do not trust an institution? Then you need to create individual heroes. What is the opposite of an institution? It’s an individual.

So you need control over that as well. You need two levers: one for those who trust and one for those who don’t. What we are talking about here as the individual is the leaking aspect.

Kristin: And your whistleblower, and your thought leader . . .

Gopi: Yeah, exactly. Your whistleblower process, your hero creation process, the individually-based thing. That is the lever there. That’s how the lack of trust as well as trust are both in your field of operation.

It makes sense, diabolical sense. It just tells you how critical a resource human attention and action have become. The connection between human attention and action. What people attend to and what they act on. These are the levers.

What they feel is just a means to an end. These machines don’t really care what you actually feel. The feeling is just part of that lever process, to direct you to the action. It’s just a conduit to action.

None of these things have any independent value except for their predictability. That is the landscape we are having to deal with. That is what we saw unleashed in the last three years.

What I felt was it was the law of recapitulation. Before you do a new step, you have to redo all the previous steps. Just as every person goes through childhood before adulthood.

That’s what it felt like in the last three years. We just redid all the steps from the Black Plague in the Middle Ages to the present. It was just a fast-forward of the same thing: a medieval fear of disease, combined with the industrial process, combined with some other manipulation. And then Pandora’s box burst open with all the manipulative things that had been done until then. And now we are dealing with the next stage.

And the descriptions you are giving, and the tussling with the truth, and all of that means we are, from Trump’s time to now, in that zone of recapitulating and advancing on to manipulating people on a huge scale.

Kristin: Gopi, just to end this conversation, what kind of advice would you give to people in light of everything we have talked about? Things people can do. What is within the realm of control in such a dystopian reality?

Gopi: Well I think the analogy of physical therapy kind of encapsulates it. You have to become in charge of your own physical therapy.

You have to be in charge of your thoughts and feelings to a greater and greater level. This means you bring them under scrutiny with the same ruthlessness with which you would scrutinize somebody who is messing with you.

If it’s the lack of doing that, if it’s the lack of self-reflection, self-contemplation, and self-engagement, if that is the Achilles heel, then that is what we need to be working on. We have to be fully aware of this landscape, and not expect a bountiful harvest from a swamp. We have to understand which landscape gives what and work accordingly. Knowing these influences helps us place ourselves in the right landscape so we can actually navigate to the point where we can find the group of people we need and the activities we need to focus on. All of these things are to be decided as consciously and independently as possible.

Is this my idea? Is it consciously placed? Is it something that follows from what I am putting in my own mind? Or does it not? Is it a foreign influence of any kind? Really, we need to guard our own minds like a sanctuary, and be able to develop them.

I would elaborate on the metaphor of the physical therapy to the different layers. Are you able to hold back from something you really want to do? Are you able to initiate action in something you really don’t want to do? Are you able to hold your attention to things regardless of what rains down on you?

Are you able to do it every day? Are you able to do it continually? Are you able to do things forwards and backwards? There’s a reason there’s a phrase “knowing something forwards and backwards.” You actually have to know it forwards and backwards in time! Can you mentally run things forwards and backwards?

These are everyday terms that pack a lot of punch, and they can still get people to engage in the real waking-up process. Look at all the machines as metaphors for what we need to be working on in ourselves. If that’s what we learn from them, that’s the way to go forward.

Gopi Krishna is from Bangalore, India. He completed his undergraduate physics training at the Indian Institute of Technology Kanpur (India), and his Ph.D. in Physics (Solar Energy) at the University of Houston (USA) in 2014. He is currently engaged in Salt Lake City (USA) in the study of the Reciprocal System of Physics, a way to bring Goethean thought into modern physics.

More recently, Gopi Krishna has been volunteering with Utah Health Independence Alliance, including participating in the production of the documentary: "Utah: Safe and Effective."

The full version of the recently released documentary film, entitled “Utah: Safe & Effective?”, is now available to stream free online. Feel free to watch and share it, particularly with medical professionals.

Wow. I'm astonished by this incredibly insightful interview. Thank you, Kristin, for posting this. You both explore so many levels of this topic that it covers a wide spectrum. My one disagreement would be Gopi's comment on waking up. While the point he makes is relevant, I feel he misses the mark. Yet I had to chuckle at his closing remarks, which sounded a lot like waking up, to me.

Earlier in the interview, he stated: ‘Whatever we have not resolved as issues in our own selves suddenly becomes a global data point and ends up programming the machines to reflect back to us what we did not want to deal with.’ This sounds like a call to wake up as well. Dealing with our unresolved issues is one of the definitions of waking up.

But I feel the greater issue here is waking up to the deceptions we have endured the past three years, which in turn triggers a waking up to past deceptions retroactively. The dominoes start to fall backwards, the veil gets drawn, and we see our current affairs in an entirely new light. The social media parlance of being 'red-pilled' (as in The Matrix) is, I feel, an accurate metaphor. I personally have been red-pilled countless times over the past three years, to the point where my entire worldview and historical view have shifted drastically. Even my own self-reference and identity have changed, much like Neo's. I would call this waking up.

As for the Great Awakening, which I think Gopi was referring to in his comment, that is open to interpretation. But when enough people have individually woken up to the deceptions wrought upon them, it can, and hopefully will, reach critical mass, and the pushback will gain much more traction.

Your interview I would deem a prime example of material by which to 'wake up'.

Kristin, thank you for directing me to this post. I just finished reading it, and as I got to the bottom I clearly saw echoes of my new blog, a title I actually came up with a year ago but kept putting off, never fleshing out my thoughts enough to begin posting articles. I DO see this as... Wrestling with Truth!

I will digest my notes & post some comments on my Substack, with a link back to this article. There is a LOT of good in here, and some things I might take issue with or further elaborate upon.